SinDDM: A Single Image Denoising Diffusion Model

ICML 2023

| Vladimir Kulikov | Shahar Yadin | Matan Kleiner | Tomer Michaeli |

|

Technion - Israel Institute of Technology |

| [Paper] | [Supplementary] | [Proceedings] | [Code] |

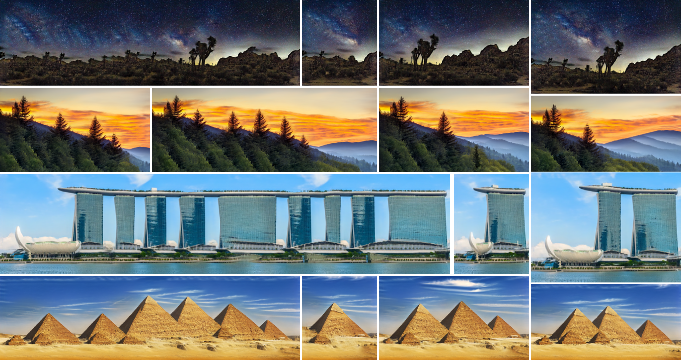

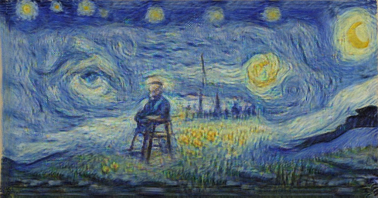

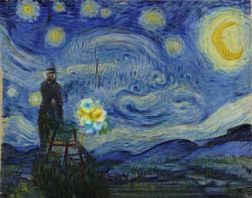

| Training Image | Random Samples |

|

|

Abstract

Denoising diffusion models (DDMs) have led to staggering performance leaps in image generation, editing and restoration. However, existing DDMs use very large datasets for training. Here, we introduce a framework for training a DDM on a single image. Our method, which we coin SinDDM, learns the internal statistics of the training image by using a multi-scale diffusion process. To drive the reverse diffusion process, we use a fully-convolutional light-weight denoiser, which is conditioned on both the noise level and the scale. This architecture allows generating samples of arbitrary dimensions, in a coarse-to-fine manner. As we illustrate, SinDDM generates diverse high-quality samples, and is applicable in a wide array of tasks, including style transfer and harmonization. Furthermore, it can be easily guided by external supervision. Particularly, we demonstrate text-guided generation from a single image using a pre-trained CLIP model.

Talk

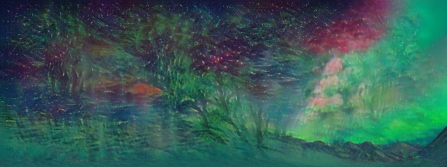

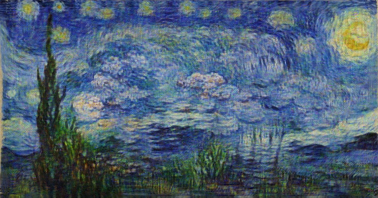

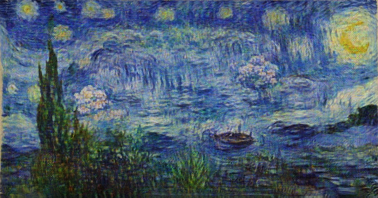

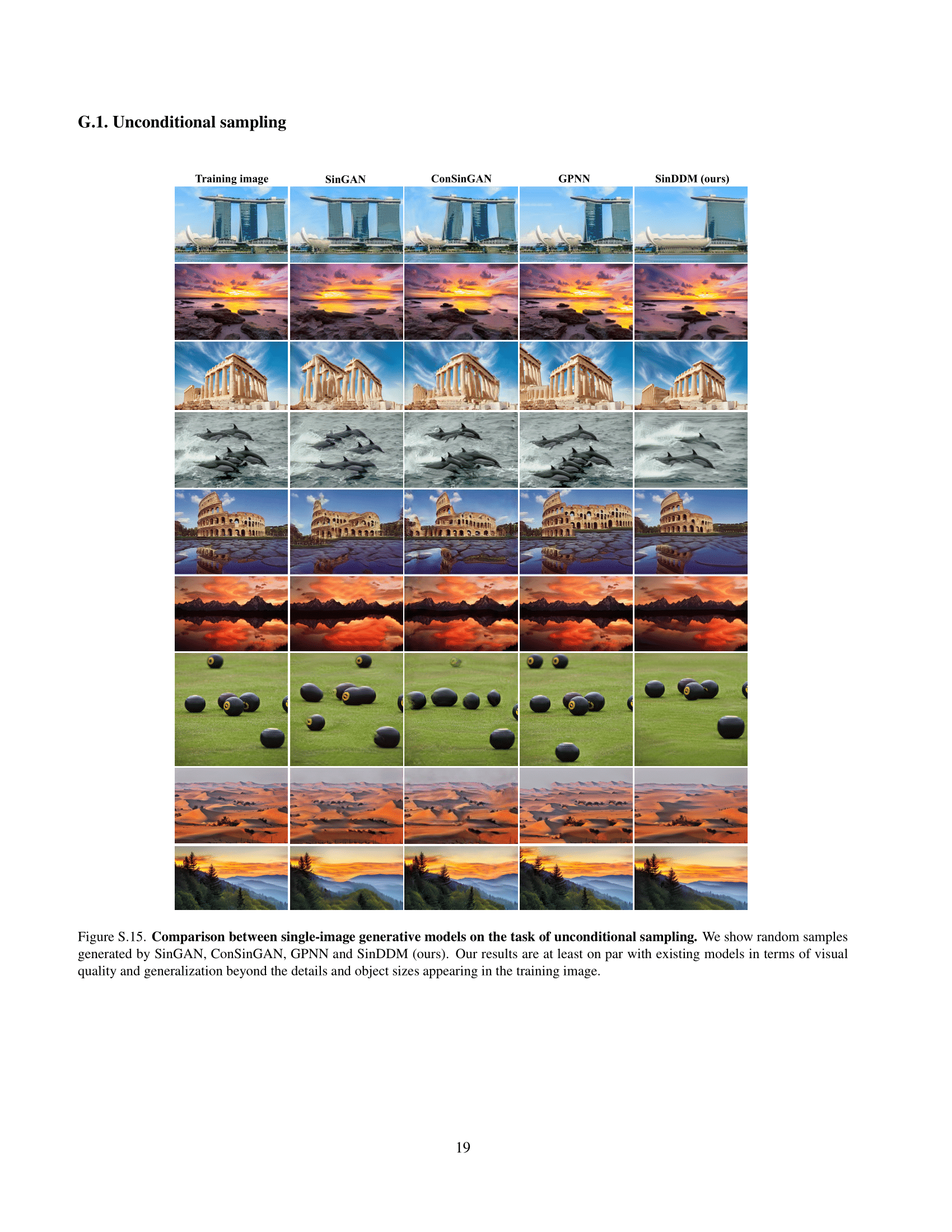

Image Generation with Text-Guided Style

SinDDM can control the style of the generated samples by providing a text prompt in the form of "X Style" (e.g. "Van Gogh Style"). This is achieved by applying CLIP guidance only in the finest scale.

| Training Image | "Monet Style" | ||

|

|

|

|

|

|

|

|

|

|

|

|

| "Van Gogh Style" | |||

|

|

|

|

|

|

|

|

|

|

|

|

Below are a few more examples, where the samples are in the dimensions of the training image. For each of the images below you can choose a style from the list and see a corresponding generated sample.

Text-Guided Style Transfer

Rather than controlling the style of the random samples generated by our model, we can also use SinDDM to modify the style of the training image itself. We achieve this by injecting the training image directly to the finest scale so that the modifications imposed by our denoiser and by the CLIP guidance only affect the fine textures. This leads to a style-transfer effect, but where the style is dictated by a text prompt rather than by an example style image. For each of the images below you can choose a style from the list and see the results.

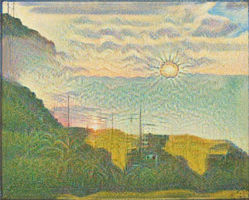

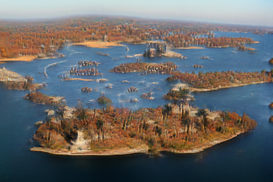

Image Generation with Text-Guided Contents

Besides style, the user can also control the contents of the generated samples using a text prompt. Here again we use CLIP's external knowledge to guide the image generation process, but as opposed to the style guidance setting, here we employ CLIP also in coarser scales.

| Training Image | "Matterhorn Mountain" | |||

|

|

|

|

|

| "Van Gogh" | ||||

|

|

|

|

|

| "a fire in the forest" | ||||

|

|

|

|

|

| "Camelot" | ||||

|

|

|

|

|

| "sand castle" | ||||

|

|

|

|

|

| "stars constellation in the night sky" | ||||

|

|

|

|

|

| "mesoamerican pyramids" | ||||

|

|

|

|

|

| "sunset" | ||||

|

|

|

|

|

| "oasis" | ||||

|

|

|

|

|

| "Grand Canyon" | ||||

|

|

|

|

|

Image Generation with Text-Guided Content in ROI

Instead of guiding the generation of the entire image, we can also confine the modifications to a user prescribed region of interest.

| Training Image | Input | Output | ||

|

"cracks" |

|

||

|

"clouds" |

|

Generation guided by image contents within ROIs

SinDDM is able to generate images with user-prescribed contents within several ROIs. The rest of the image is generated randomly but coherently around those constrains.

Training Image

ROI Guidance

Random Samples

|

SinDDM: A Single Image Denoising Diffusion Model. Vladimir Kulikov, Shahar Yadin, Matan Kleiner, Tomer Michaeli. [Proceedings] [Arxiv] [Paper] |

Bibtex

|

More results, comparison to other methods and further discussion about our model can be found in the supplementary material. Our official code implementation can be found in the official SinDDM github repository. |

|

|

| [Supplementary] | [Code] |

AcknowledgementsWe thank Hila Manor for the help with this website. A lot of features are taken from bootstrap. All icons are taken from font awesome. |